
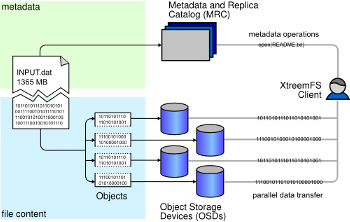

XtreemFS employs the object-based file system concept. File metadata such as the filename, the owner and the directory tree is stored on a metadata server (MRC). The content of files is split into fixed-size chunks of data (the objects), which are stored on object storage servers (OSDs). The object size is fixed per file but may differ between files. This separation of metadata and file content allows us to scale storage capacity and bandwidth by adding storage servers (OSDs) as needed.
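To make the object concept concrete: because all objects of a file have the same size, finding the object that holds a given byte is plain integer arithmetic. The Python sketch below assumes objects are numbered from 0; the function names and the 128 KiB object size are illustrative and not taken from XtreemFS.

```python
# Minimal sketch: mapping file offsets to fixed-size objects.
# Assumptions (illustrative only): objects are numbered from 0 and
# every object of a file has the same per-file object size.

def locate(offset: int, object_size: int) -> tuple[int, int]:
    """Return (object number, offset within that object) for a file offset."""
    if offset < 0 or object_size <= 0:
        raise ValueError("offset must be >= 0 and object_size > 0")
    return divmod(offset, object_size)


def object_count(file_size: int, object_size: int) -> int:
    """Number of objects needed for file_size bytes (the last one may be partial)."""
    return -(-file_size // object_size)  # ceiling division


if __name__ == "__main__":
    size = 128 * 1024  # hypothetical 128 KiB object size
    print(locate(300_000, size))        # (2, 37856)
    print(object_count(300_000, size))  # 3
```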

The directory service (DIR) serves as a central registry that the XtreemFS services use to find their peers. Clients also use it to find the MRC that stores the volume mounted by the user.
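The role of the DIR can be pictured as a lookup table. The class below is a hypothetical in-memory stand-in (names such as `register` and `mrc_for_volume` are invented for illustration and are not the XtreemFS API): services register under a UUID with their type and address, and a client mounting a volume asks which MRC holds it.

```python
from dataclasses import dataclass, field


@dataclass
class DirectoryService:
    """Toy stand-in for the DIR (hypothetical API, not XtreemFS code)."""

    # service UUID -> (service type, network address)
    services: dict[str, tuple[str, str]] = field(default_factory=dict)
    # volume name -> UUID of the MRC that stores the volume
    volumes: dict[str, str] = field(default_factory=dict)

    def register(self, uuid: str, service_type: str, address: str) -> None:
        self.services[uuid] = (service_type, address)

    def find(self, service_type: str) -> list[str]:
        """Addresses of all registered services of a type, e.g. 'OSD'."""
        return [addr for t, addr in self.services.values() if t == service_type]

    def register_volume(self, volume: str, mrc_uuid: str) -> None:
        self.volumes[volume] = mrc_uuid

    def mrc_for_volume(self, volume: str) -> str:
        """The question a client asks when mounting a volume."""
        _, address = self.services[self.volumes[volume]]
        return address


if __name__ == "__main__":
    d = DirectoryService()
    d.register("mrc-1", "MRC", "mrc1.example.org")
    d.register("osd-1", "OSD", "osd1.example.org")
    d.register_volume("myVolume", "mrc-1")
    print(d.mrc_for_volume("myVolume"))  # mrc1.example.org
```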

The XtreemFS client is an active component of our system. It translates the file system calls into requests to the MRCs and OSDs in the installation.

XtreemFS supports striping of file content. Different parts of a striped file are handled by different OSDs. Striping can substantially increase read/write throughput, since read and write requests for a single file can be processed by multiple OSDs in parallel. The client ensures that read and write requests for a file are translated to read and write requests at object granularity. Depending on the striping pattern, object requests are then transmitted to the respective OSDs.
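The translation from a byte-range request to object requests can be sketched as follows. The sketch assumes a RAID0-style round-robin pattern in which object n is stored on OSD n mod k of the k OSDs in the striping pattern; the function name and the tuple layout are hypothetical.

```python
def split_request(offset: int, length: int, object_size: int,
                  osds: list[str]) -> list[tuple[str, int, int, int]]:
    """Translate a byte-range request into per-object requests.

    Assumes RAID0-style round-robin placement: object n lives on
    osds[n % len(osds)]. Returns (osd, object number, offset in object,
    number of bytes) for every object the range touches.
    """
    requests = []
    pos, end = offset, offset + length
    while pos < end:
        obj, obj_off = divmod(pos, object_size)
        # Do not cross the object boundary or the end of the request.
        chunk = min(object_size - obj_off, end - pos)
        requests.append((osds[obj % len(osds)], obj, obj_off, chunk))
        pos += chunk
    return requests


if __name__ == "__main__":
    for req in split_request(100, 250, 128, ["osd-A", "osd-B", "osd-C"]):
        print(req)
    # ('osd-A', 0, 100, 28)
    # ('osd-B', 1, 0, 128)
    # ('osd-C', 2, 0, 94)
```

Because each tuple names its target OSD, the client can issue the requests for different OSDs concurrently, which is where the throughput gain of striping comes from.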

For a detailed description of the problems with POSIX semantics and striping as well as our solution, please read our paper on "Striping without Sacrifices" [PDF].

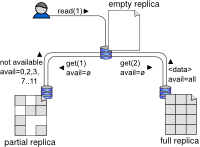

XtreemFS can improve availability, data safety and access times by means of file replication. In its current state, XtreemFS supports replication of read-only file content. Read-only replication requires a file to be explicitly marked as 'read-only' as soon as its content is no longer supposed to be altered. Once this is done, new replicas can be added.
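The read-only rule amounts to a simple precondition on adding replicas. The class below is a hypothetical model of that rule, not XtreemFS code; a replica is represented only by its list of OSD identifiers.

```python
class ReplicatedFile:
    """Hypothetical model of the read-only replication rule."""

    def __init__(self, name: str, first_replica: list[str]):
        self.name = name
        self.data = b""
        self.read_only = False
        self.replicas = [first_replica]  # each replica: list of OSD ids

    def mark_read_only(self) -> None:
        # From now on the content is frozen and writes are rejected.
        self.read_only = True

    def write(self, data: bytes) -> None:
        if self.read_only:
            raise PermissionError(f"{self.name} is read-only")
        self.data = data

    def add_replica(self, osds: list[str]) -> None:
        # Replicas may only be added once the content can no longer change.
        if not self.read_only:
            raise RuntimeError("mark the file read-only before adding replicas")
        self.replicas.append(osds)
```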

Replica locations are part of the file's metadata. When opening a file, the client retrieves a list of all replicas, each replica comprising a striping policy and a list of OSDs. To read data from a replicated file, a client may contact OSDs from any of the replicas. Thus, fail-over strategies can be implemented by contacting a different OSD if an OSD does not respond in a timely manner.
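A client-side fail-over loop could look like the sketch below. It assumes the same RAID0-style placement within each replica and a caller-supplied `read_fn` that raises `TimeoutError` when an OSD does not answer in time; both the placement and the `read_fn` contract are assumptions made for illustration.

```python
from typing import Callable


def read_with_failover(replicas: list[list[str]], object_number: int,
                       read_fn: Callable[[str, int], bytes]) -> bytes:
    """Read one object, falling back to the next replica on timeout.

    replicas: each replica is the list of OSDs of its striping pattern.
    Assumes object n of a replica lives on osds[n % len(osds)].
    """
    errors = []
    for osds in replicas:  # replica order = preference order in this sketch
        osd = osds[object_number % len(osds)]
        try:
            return read_fn(osd, object_number)
        except TimeoutError as exc:
            errors.append((osd, exc))  # remember the failure, try next replica
    raise RuntimeError(f"no replica answered for object {object_number}: {errors}")


if __name__ == "__main__":
    def fake_read(osd: str, obj: int) -> bytes:
        if osd == "osd-1":  # simulate an unresponsive OSD
            raise TimeoutError("no response")
        return f"object {obj} from {osd}".encode()

    replicas = [["osd-1", "osd-2"], ["osd-3", "osd-4"]]
    print(read_with_failover(replicas, 0, fake_read))  # b'object 0 from osd-3'
```

Which replica is tried first is a policy decision; this sketch simply uses list order.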

Replica consistency is automatically ensured among the OSDs. In response to a read request, an OSD first checks whether the corresponding object is locally available. If not, the object is replicated from a remote OSD. Since multiple remote OSDs may hold a replica of the object, a transfer strategy defines which one to choose. As soon as the object data has been fetched, the OSD stores it locally while sending a response to the client. Thus, missing objects of a file replica are filled with data on demand.
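The on-demand behaviour on the OSD side is sketched below. `ReplicaOSD` and its `strategy` parameter are hypothetical: the transfer strategy is modelled as a function that orders the remote OSDs, and remote OSDs only answer from their local store. Unlike the description above, the sketch stores the object before returning it rather than concurrently with the response.

```python
from typing import Callable, Dict, List, Optional


class ReplicaOSD:
    """Hypothetical OSD holding one replica of a file, filled on demand."""

    def __init__(self, name: str, remotes: List["ReplicaOSD"],
                 strategy: Callable[[List["ReplicaOSD"]], List["ReplicaOSD"]] = lambda r: r):
        self.name = name
        self.objects: Dict[int, bytes] = {}  # locally stored objects
        self.remotes = remotes               # OSDs holding other replicas
        self.strategy = strategy             # transfer strategy: order to try remotes in

    def local(self, obj: int) -> Optional[bytes]:
        return self.objects.get(obj)

    def read(self, obj: int) -> bytes:
        data = self.local(obj)
        if data is None:
            # Object missing locally: fetch it from a remote replica.
            for remote in self.strategy(self.remotes):
                data = remote.local(obj)
                if data is not None:
                    self.objects[obj] = data  # later reads are served locally
                    break
            else:
                raise KeyError(f"object {obj} not found on any replica")
        return data


if __name__ == "__main__":
    full = ReplicaOSD("osd-A", remotes=[])
    full.objects = {0: b"aaa", 1: b"bbb"}
    empty = ReplicaOSD("osd-B", remotes=[full])
    print(empty.read(1))          # b'bbb' (fetched from osd-A)
    print(sorted(empty.objects))  # [1] (only the requested object was filled)
```

The eager alternative for newly created replicas would amount to calling `read` for every object number of the file right after the replica is created.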

Newly created replicas of a file are initially empty, i.e. they do not contain any data. As an alternative to on-demand object fetching, data can also be replicated immediately when the replica is created.

With future versions of XtreemFS, we aim for full read-write replication of file content as well as metadata. We rely on a master-slave scheme to ensure replica consistency: changes are only accepted by a designated master replica and propagated to the remaining slave replicas. To keep the system operable in the face of master failures, we use a fail-over mechanism based on leases. A replica needs to acquire a lease to become the master replica. Leases are only valid for a certain span of time, which guarantees that a crashed master replica will eventually lose its master status and allow a different replica to become the master. To make sure that all replicas agree on the same master, a lease negotiation protocol based on the Paxos algorithm is used. For further details, please refer to our paper on Paxos-based lease negotiation [PDF].
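The time-bounded lease is what lets a new master take over after a crash. The sketch below models only that property, using a single shared `Lease` object and an injectable clock; the Paxos-based negotiation that makes all replicas agree on the lease holder is deliberately left out, so this is not a distributed implementation. All names are hypothetical.

```python
import time
from typing import Callable, Optional


class Lease:
    """Time-bounded master lease (sketch; Paxos negotiation omitted)."""

    def __init__(self, duration: float, clock: Callable[[], float] = time.monotonic):
        self.duration = duration
        self.clock = clock
        self.owner: Optional[str] = None
        self.expires = 0.0

    def try_acquire(self, replica: str) -> bool:
        """Grant or renew the lease if it is free, expired, or already ours."""
        now = self.clock()
        if self.owner is None or now >= self.expires or self.owner == replica:
            self.owner = replica
            self.expires = now + self.duration
            return True
        return False

    def is_master(self, replica: str) -> bool:
        return self.owner == replica and self.clock() < self.expires


if __name__ == "__main__":
    t = [0.0]  # fake clock for a deterministic demo
    lease = Lease(duration=10.0, clock=lambda: t[0])
    print(lease.try_acquire("replica-1"))  # True
    print(lease.try_acquire("replica-2"))  # False (lease still held)
    t[0] = 11.0                            # replica-1 crashed and never renewed
    print(lease.is_master("replica-1"))    # False
    print(lease.try_acquire("replica-2"))  # True
```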

To efficiently maintain large amounts of file system metadata that may outgrow the main memory of an MRC, we developed BabuDB, a lightweight key-value store. BabuDB has been specifically tailored to the needs of a file system metadata server. Besides offering decent performance even with large numbers of files and directories, its LSM-tree-based design allows it to efficiently handle short-lived files. Moreover, it inherently supports snapshots of file system metadata, which will be used in future versions of XtreemFS to create backups and instantaneous snapshots of a volume.
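The following toy store illustrates the LSM-tree ideas mentioned above; it is not BabuDB and does not use its API. Writes go to an in-memory memtable, `flush` turns the memtable into an immutable sorted run, and lookups search the memtable and then the runs from newest to oldest. Because runs are never modified, a snapshot can simply keep references to them, and a file that is created and deleted before the next flush never has its value written to a run.

```python
import bisect


class TinyLSM:
    """Minimal LSM-tree-style key-value store (illustration only, not BabuDB)."""

    _TOMBSTONE = object()  # marks a deleted key

    def __init__(self):
        self.memtable = {}
        self.runs = []  # immutable sorted runs (tuples of (key, value)), oldest first

    def put(self, key, value):
        self.memtable[key] = value

    def delete(self, key):
        self.memtable[key] = self._TOMBSTONE

    def flush(self):
        """Freeze the memtable into a new immutable sorted run."""
        if self.memtable:
            self.runs.append(tuple(sorted(self.memtable.items())))
            self.memtable = {}

    def snapshot(self):
        """Runs never change, so a snapshot is just a list of references."""
        self.flush()
        return list(self.runs)

    @classmethod
    def _lookup(cls, runs, key):
        for run in reversed(runs):  # the newest run wins
            i = bisect.bisect_left(run, (key,))
            if i < len(run) and run[i][0] == key:
                return run[i][1]
        return cls._TOMBSTONE

    def get(self, key, snapshot=None):
        if snapshot is not None:
            value = self._lookup(snapshot, key)
        elif key in self.memtable:
            value = self.memtable[key]
        else:
            value = self._lookup(self.runs, key)
        return None if value is self._TOMBSTONE else value


if __name__ == "__main__":
    db = TinyLSM()
    db.put("/home/a.txt", "inode 1")
    snap = db.snapshot()
    db.put("/tmp/scratch", "inode 2")
    db.delete("/tmp/scratch")  # short-lived: only a tombstone is flushed
    db.put("/home/a.txt", "inode 3")
    db.flush()
    print(db.get("/home/a.txt"))                 # inode 3
    print(db.get("/home/a.txt", snapshot=snap))  # inode 1
    print(db.get("/tmp/scratch"))                # None
```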

First measurements have shown that our BabuDB-based MRC outperforms an equivalent MRC implementation that is based on a local ext4 file system, as well as one that is based on Berkeley DB for Java. Please visit http://babudb.googlecode.com for more information on BabuDB.